Nano Banana and Flux Kontext Pro: Using Third-Party AI Models in Photoshop

Partner models add powerful new options for Generative AI in Photoshop

Adobe has been developing Photoshop’s Firefly AI engine for some years, and while some of its effects are spectacular, its ability to generate images from text prompts has proved lackluster. With Photoshop 2026, Adobe has incorporated two third-party AI models: Google’s Gemini 2.5 Flash Image (also known as Nano Banana) and Flux Kontext Pro. The results are quite impressive. Here’s a look.

To get started, make a selection (use Select All for the entire canvas) and choose Generative Fill from the Contextual Taskbar. Select either Google Gemini or Flux from the pop-up menu.

Change the viewpoint

This stock image shows a woman sitting on a sofa, elbow resting on her knee. I gave it the text prompt “turn woman so she is lying back against the sofa with a laptop on her knees”. The result, right, is astonishing: it’s clearly the same person, wearing the same clothes. There are no visual glitches whatsoever.

Colorize an old photo

This photograph of my great-grandfather was taken in the early 1900s. I used the text prompt “make this photograph look as if it was shot with a modern camera in a studio setting, full color, hard sunlight coming from a window, do not change pose or size”. The middle version uses the Flux Kontext Pro engine, which has taken some liberties with its interpretation (such as adding stubble and rather too many wrinkles) and has interpreted some damage in the photo as detail in the tie. The image on the right uses the Nano Banana engine, producing results that are not just lifelike but faithful to the original.

It’s not just people

Text prompts can be used to make almost any kind of modification. Here, I gave Photoshop this photograph of a building covered in scaffolding. The prompt was simple: “remove scaffolding”.

The result is an immaculate view of the house, as if the scaffolding had never been there.

Nano Banana vs Firefly

Photoshop’s native Firefly engine is great at removing objects from a scene, but it struggles with complexity. Here’s a view of a street in France, full of people.

Removing the people using Firefly involved making automatic selections of all the bodies, then using Generative Fill to remove them. The results are acceptable at first glance, but what are those disembodied heads in the distance? And is that really a bicycle on the left? Why is the top of the post to its right rotated 90 degrees? Many other small details fail on closer inspection (the posts on the sidewalk, the brick pattern in the street, the plexiglas door on the little library, etc.).

Nano Banana, by contrast, took the text prompt “remove people” and did a near-perfect job, including the arm on the right. The bicycles are accurate and the road surface is believable. But why did it add a woman walking towards us in the distance? And it can’t figure out where the bottom of the plexiglas door should be. Still, it’s a very impressive result.

This article was last modified on October 28, 2025

This article was first published on October 28, 2025

Commenting is easier and faster when you're logged in!

Recommended for you

Free Webinar: Photoshop + AI—Smarter Tools, Better Results

Learn how to use Photoshop’s AI tools to speed up your editing process and...

Changing the Apron Color in Photoshop

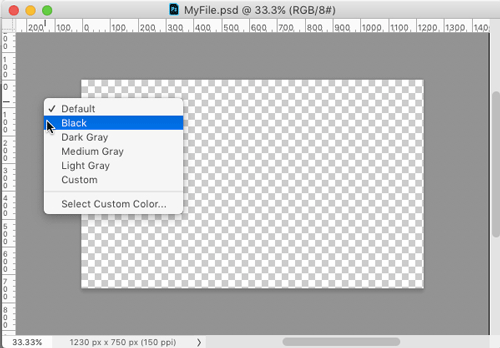

I know what you’re thinking. What the heck is the “apron” in P...

Free Webinar: Unlocking the Potential of AI in Video

Discover how AI in video can elevate your creative output while streamlining you...