What’s New in Creative Cloud 2024

Learn how Adobe is using generative AI to transform Creative Cloud

This article appears in Issue 25 of CreativePro Magazine.

A half century ago, Arthur C. Clarke famously said, “Any sufficiently advanced technology is indistinguishable from magic.” Many of the technology demos at the 2023 Adobe MAX conference could pass for some form of magic, but they’re really just sufficiently advanced technology.

Crowd-pleasing magic is one thing; practical workflow enhancements are another. The real question is, will any of the sorcery shown at MAX translate into capabilities that can improve your creativity and production workflow? That’s what we’ll explore in this article.

Change of Pace

If you’ve followed Adobe MAX in recent years, you may have noticed something different in September 2023: A major Photoshop upgrade appeared several weeks in advance of the annual global conference. Adobe acknowledged that they are no longer tying the Creative Cloud upgrade cycle to the Adobe MAX Day 1 announcements. Similarly, Denoise, a major new AI-powered feature in Lightroom Classic, was released back in April in version 12.3.

The technology driving many of the new features in Creative Cloud 2024 apps is generative AI. At Adobe, generative AI is collectively called Adobe Firefly technology, and it is being applied through web and mobile apps as well as the core Creative Cloud desktop applications. Let’s start with a look at those.

Photoshop

Generative AI brings more benefits to Photoshop 2024 (version 25) than to any other major Adobe application. But Photoshop added other useful enhancements too. The scope of the changes is wide and deep enough that you should review some of your Photoshop habits and workflows to see if there are better options now.

Generative AI, based on Adobe Firefly

If you read Micah Burke’s article in issue 21 of CreativePro Magazine, you know that using

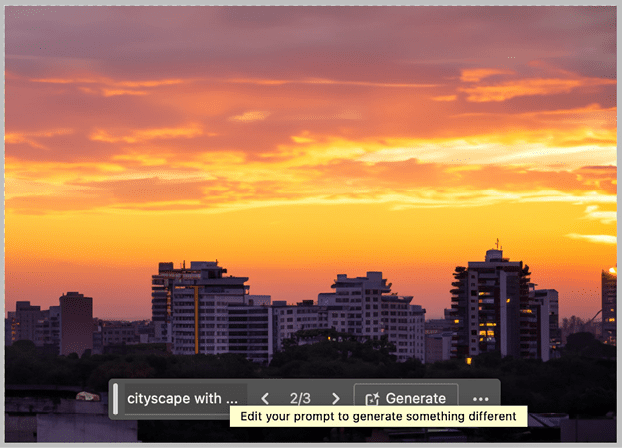

generative AI involves typing a text description of what you want (called a prompt), and then the service generates a corresponding image from the huge data sets it was “trained” on. For example, you can ask for cityscape with mountains at sunset, and generative AI renders that image (Figure 1). It’s better at some subjects than others.

Figure 1. A text prompt creates a new image, using Photoshop’s Generative Fill feature.

Generative AI is also unlike other new features because of unique issues related to copyright, disclosure of AI use, and compensation for creators whose works were used to train generative AI. I discuss these and other generative AI issues affecting Adobe applications later in this article (see “Creative Cloud: The Firefly Generation” below).

Production-friendly AI

Many demos of generative AI show whole images being created as a single layer of pixels. But in real life, you’re probably going to want to adjust certain elements, remove others, or combine them with other images. One way that Photoshop facilitates these tasks is by putting the results on a separate layer, typically with a mask. Another way is by generating variations, so you don’t have to take what you get: You can choose a different variation or generate more variations (Figure 2).

Figure 2. A Generative Fill result in Photoshop appears as a separate layer in the Layers panel, and with selectable Variations in the Properties panel.

In Photoshop, generative AI can also help you repair, enhance, and combine your own existing images. Generative AI appears in Photoshop in different forms, including Generative Fill (for filling a selection), Generative Expand (for enlarging the canvas), and the Remove tool (for painting over an object to replace it with content matching the background).

In some cases, generative AI can do a faster and more effective job than some traditional Photoshop tools and techniques. For example, the new Remove tool frequently (but not always) retouches and repairs images more effectively than the older healing, cloning, and content-aware tools. If you aren’t already using generative AI features as routine production tools, it’s worth giving them a try.

Photoshop also tries to anticipate what you need. For example, if you select part of a photo and just want to convincingly delete what you selected, you don’t have to type a description—just click Generate.

Contextual Task Bar

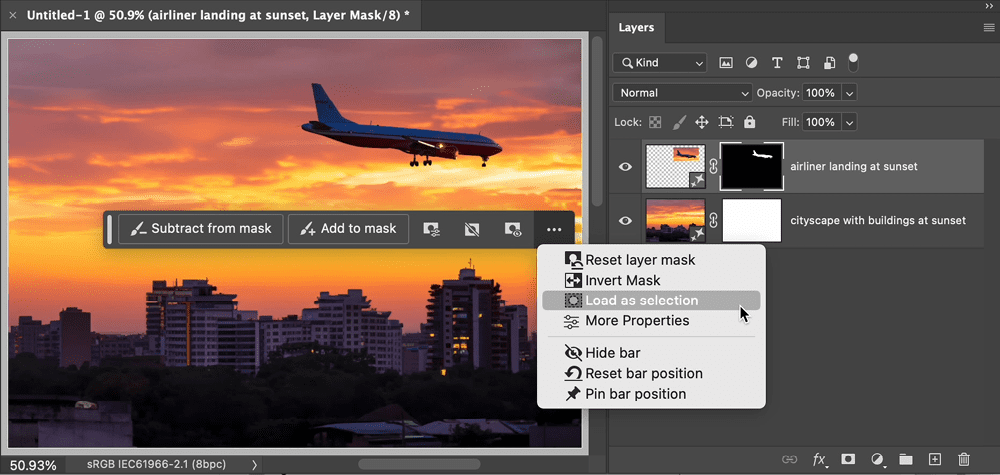

You may have been surprised by a small new panel that likes to stick close to your work: the Contextual Task Bar. You might be thinking: Aren’t the Options bar and Properties panel already contextual? Indeed, they are. But the Options bar is specific to the current tool, and the Properties panel is specific to the current selection. The Contextual Task Bar is specific to the current task, so it can offer options and suggest actions that may not have a place in the Options bar or Properties panel (Figure 3).

Figure 3. The Contextual Task Bar doesn’t add anything new to masking, but it does make visible some traditional masking options that many Photoshop users may not have noticed.

Some people see the Contextual Task Bar as an unwelcome intrusion, and they want to push it away (you can hide/show it by choosing Window > Contextual Task Bar). But I suggest giving it a chance, as it will undoubtedly become the hub of new workflows.

For example, when using Generative Fill, clicking the Contextual Task Bar to enter your prompt is a lot faster and easier than going up to the menu to choose Edit > Generative Fill. The more time you spend with the Contextual Task Bar, the more you discover that it surfaces features and options that were always there but previously not very visible. It can help you discover time-saving options that in the past might have been known only by power users who poked into all the nooks and crannies of Photoshop.

An upgraded Gradient tool

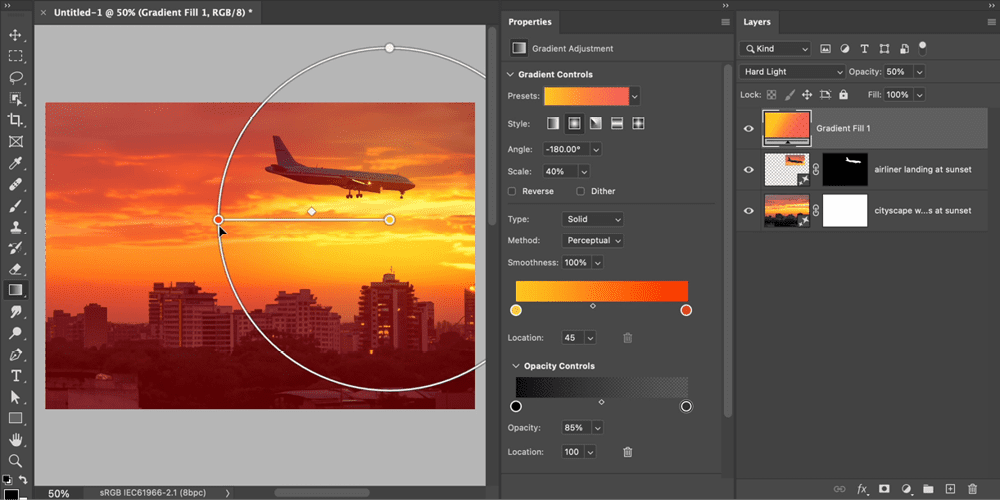

Starting several versions ago, Photoshop made it possible to apply gradients as a fill layer or layer effect so you could easily edit the gradient later. The last missing piece was the Gradient tool. Through Photoshop 2023 (version 24), the Gradient tool, originally built to create pixel gradients, could not edit a gradient fill layer. Adobe finally addressed that issue, starting in Photoshop 24.5.

Many experienced Photoshop users have been confused about how to edit the new gradients because the controls appear in unfamiliar locations such as the Properties panel.

So, when editing a gradient fill layer, keep the Properties panel open because it shows gradient fill layer editing options, which were hidden away in a stack of modal dialog boxes in older versions of Photoshop. If you want to see the new on-canvas controls for directly manipulating gradient angle and color stops, make sure the Gradient tool is selected (Figure 4).

Figure 4. To edit a gradient fill layer, keep the Properties panel open and select the Gradient tool.

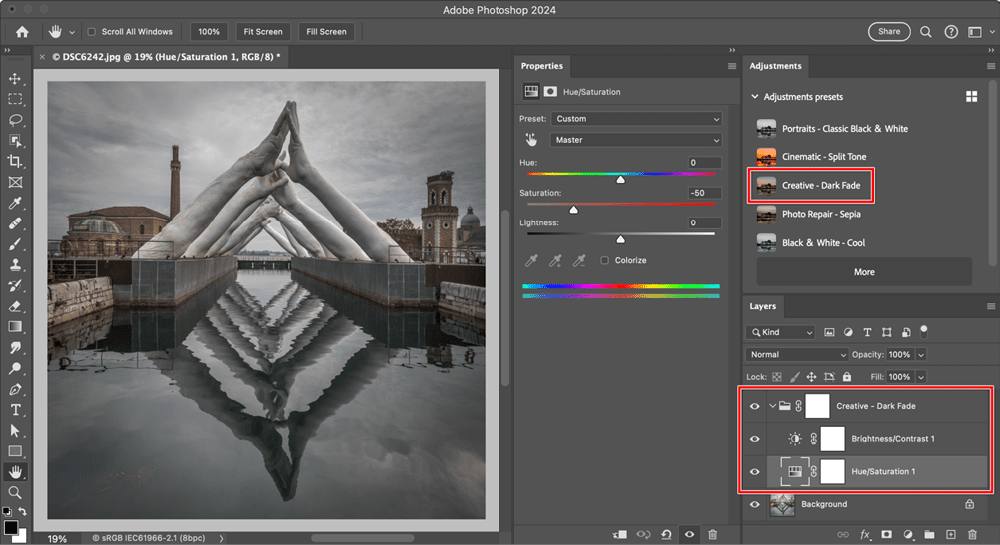

Adjustments presets

The Adjustments panel now includes presets, which are adjustment layer groups designed to apply useful effects. You might know that some individual adjustment layers, such as Curves and Levels, already offer presets. The difference is that the new adjustments presets typically combine multiple adjustment layers, often with blending modes (Figure 5).

Figure 5. Adjustments presets now appear first in the Adjustments panel, so they can be in the way if you frequently use the standard adjustments, which you might now have to scroll down to reach.

For more details, see the Photoshop 2024 announcement.

Adobe Camera Raw, Lightroom Classic, and Lightroom

Editors of camera raw images got their own AI boost in April, with the addition of the powerfully effective AI Denoise feature. At MAX, Adobe announced Point Color, a valuable addition that offers more precise and flexible selective color editing than the existing HSL color panel because you can set separate hue, saturation, and luminance ranges for each color you want to alter (Figure 6).

Figure 6. Point Color provides great flexibility for nuanced color adjustments.
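
To make the idea of separate hue, saturation, and luminance ranges concrete, here is a minimal Python sketch of range-based color selection. It is not Adobe's implementation; the function names, the use of the standard library's HLS color model, and the tolerance values are all assumptions chosen for illustration.

```python
import colorsys

def hue_distance(a, b):
    """Shortest distance in degrees between two hues on the 0-360 color wheel."""
    d = abs(a - b) % 360.0
    return min(d, 360.0 - d)

def in_color_range(rgb, target_hls, hue_range, lum_range, sat_range):
    """Return True if an RGB pixel (0-255 per channel) lies within the given
    hue, luminance, and saturation tolerances around a target color.

    target_hls is (hue in degrees, luminance 0-1, saturation 0-1).
    Hypothetical helper for illustration only.
    """
    r, g, b = (c / 255.0 for c in rgb)
    h, l, s = colorsys.rgb_to_hls(r, g, b)
    target_h, target_l, target_s = target_hls
    return (
        hue_distance(h * 360.0, target_h) <= hue_range
        and abs(l - target_l) <= lum_range
        and abs(s - target_s) <= sat_range
    )

# Target: a saturated, mid-luminance red, with hue measured across the 0/360 wrap.
target = (350.0, 0.5, 1.0)
print(in_color_range((255, 0, 0), target, 15, 0.1, 0.2))      # pure red
print(in_color_range((255, 128, 128), target, 15, 0.1, 0.2))  # pale red, narrow luminance range
print(in_color_range((255, 128, 128), target, 15, 0.3, 0.2))  # pale red, wider luminance range
```

Because each range is independent, widening only the luminance tolerance brings the pale red into the selection without also admitting other hues, which is the kind of control a single combined slider cannot give you.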

HDR Editing (not the same as the existing HDR Merge feature) provides control over the extended HDR highlight range of tones, and it looks spectacular. But it requires a display that can render HDR levels of luminance (over 1000 nits peak, such as on the 14″/16″ Apple MacBook Pro display). It also can’t be reproduced in print. And for now, viewing on websites and mobile devices is further constrained by limited support for HDR-compatible file formats in web browsers and social media (Figure 7).


Lens Blur, currently offered as an Early Access beta, uses AI to simulate the look of narrow depth of field, by combining subject recognition with depth-aware background blur. If the image doesn’t already include a depth map recorded by the camera, AI can analyze the image to work out a synthetic depth map. Purists will say it’s much better to use the right combination of camera sensor, lens, and technique, and technically they’re right. But not everyone has the time, money, and circumstances to produce shallow depth of field in all situations. Lens Blur holds promise for improving background blur for the millions of images captured under conditions where shallower depth of field was not practical or possible.

For more information, including feature availability on specific desktop and mobile platforms, see this post.

Illustrator

The Contextual Task Bar also appears in Illustrator, suggesting that it may become a standard element in more Creative Cloud applications. Also, there’s a new Search field and filter option in the Layers panel so you can more easily find a layer type or name.

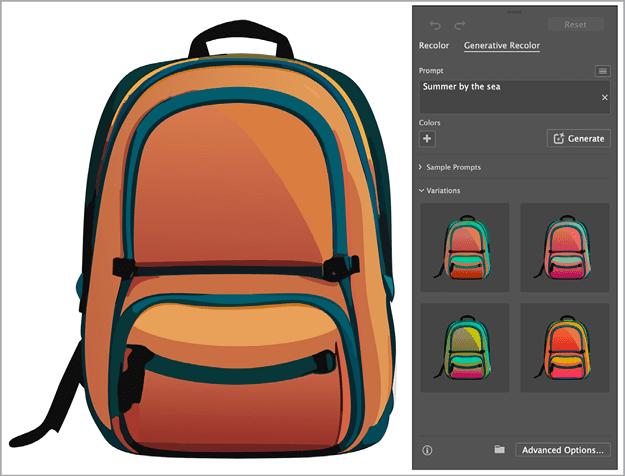

Generative AI (of course)

Illustrator gets its own dose of Firefly-powered generative AI. Generative Recolor helps you iterate artwork through coherent, harmonious color schemes more quickly than with the existing Recolor Artwork command (Figure 8).

Figure 8. Generative Recolor produces colorways faster than you can.

A few more features also use Firefly, but they’re currently in beta, so consider that before relying on them in production.

Text to Vector is the most obvious one: Type a description, and Illustrator generates your graphic as vector artwork that you can edit. Early reviews, such as this one by Von Glitschka, have been less than enthusiastic.

Retype applies AI to font and character recognition. If you have an image of text, such as a photo of a sign or label, this feature identifies the font or a close match, and if you apply it, it recreates that text as a new type layer that you can edit.

Mockup lets you simulate how your Illustrator artwork might look on a product such as a package or a T-shirt. When CreativePro gave it a try back in August, results were mixed.

For more information about the Illustrator announcements, see this post.

InDesign

InDesign has a lot of fans at CreativePro who have unfortunately gotten used to disappointment around Adobe MAX time, as they see other applications get significant new features while InDesign gets only a few minor tweaks. That held true again in 2023. But there are a few things worth mentioning.

Auto Style graduated from a technology preview (see my Adobe MAX 2022 article) to a real feature. With Auto Style, you can design your own style packs and quickly format the text in a document. Bart Van de Wiele showed how to get the most out of style packs in this video from CreativePro Week.

Other enhancements include the ability to hide spreads and to add a descriptive suffix to the filenames of JPEG and PNG images you export from InDesign. Rendering of text in Indic and Middle East and North Africa (MENA) languages improves with the addition of HarfBuzz as the default text-shaping engine in the World Ready Composer. And now you can search text in documents you share via Publish Online. Publish Online analytics are going away, but you can instead use Google Analytics because Publish Online can now integrate a Google Measurement ID.

For more information, see this post.

Bridge

Last year, the rollout of Bridge 2023 was a rocky one for many users, because of changes to the user interface, including the loss of support for multiple windows. (Bridge 2023 could have multiple floating Content panels, but that was not the same thing.)

In response to user feedback, several issues were addressed in Bridge 2024, including the return of multiple windows. You can now customize Bridge keyboard shortcuts, using the excellent visual keyboard shortcut customizer from the Adobe video apps (it’s better than the one in Photoshop and other Adobe graphics apps).

For more information and other enhancements, see this post.

Adobe Express

At last year’s MAX, Adobe Express was offered as an answer to Canva, which was eating up market share for entry-level/quick-solution graphics applications. During 2023, Express and Canva each raced to add better features than the other, including incorporating AI to further accelerate design tasks.

Adobe Firefly powers new Express features such as Generative Fill, Text to Image (type a description to have AI generate an image), and AI text effects. A beta Text to Template feature is similar to Text to Image, but it lets you skip the chore of browsing through templates by letting you type a description of what you want and then generating it for you—with variations.

Since MAX 2022, Express has also added easier video creation and automatic language translation. Express is increasingly able to differentiate itself from Canva through the power of Adobe Firefly and also through the ability to use direct connections between Express and other Adobe applications and services such as Photoshop, Illustrator, Adobe Stock, Adobe Fonts, and Adobe Color (Figure 9).

Figure 9. Describe a text effect, and Adobe Express will generate and apply it.

Express has matured into an important Creative Cloud component that Adobe clearly believes in. I think Express is important as a great starting point for new users. No longer is it necessary to jump into the deep water of expensive, high-learning-curve apps such as Photoshop, Illustrator, and Premiere Pro just to create some simple marketing materials that need to work easily across a range of social media outlets. There is a large set of users whose needs are met by the expanding Express feature set and for whom Express is a much more relevant starting point for graphic design than, for example, Photoshop Elements.

For more information and other enhancements, see this post.

Peak Sneaks

One of the most exciting parts of Adobe MAX is the Sneaks, where Adobe previews technology still in progress. Some Sneaks from past MAX years have become useful working features. I was particularly struck by this year’s Sneaks, because most were astonishing, and maybe even a little scary. To watch the Sneaks videos, see this post.

Here are some highlights from the 2023 MAX Sneaks:

Project Fast Fill uses generative AI to replace content in a video, and the new content follows the changing volume, surface, and lighting it’s applied to. For example, you can add a tie to a shirt, and the new tie will continually reshape itself to fit how the person’s body changes as they walk.

Project Draw & Delight lets you skip the task of figuring out a text prompt to get generative AI to create the vector artwork you want. Instead, you just scribble some sketchy strokes to indicate what colors and shapes you want the new artwork to have, such as a cat in a particular pose.

Project Neo lets you quickly create 3D artwork without the typically high learning curve of most 3D apps. It offers simpler ways to build 3D scenes, using tools that are likely to be intuitive to an Illustrator user, for example. This may have been the least surprising demo because many of the tools and techniques are already available in other 3D apps. However, it would fill in a missing piece of 3D in Creative Cloud: quick, entry-level 3D modeling.

Project Scene Change uses AI to not only composite two videos but also reconcile their 3D perspectives. The presenter showed a video of a person walking around a room and another video looking around some objects on a table. The resulting combined video showed the person (resized) convincingly walking around and even behind the objects on the table. It’s fascinating how the technology analyzes each 2D video to work out 3D attributes such as shape boundaries, volumes, and changing point of view. Not only that, but the resulting video shows a different 3D perspective than either of the source videos! The demo also indicates how AI may soon remove the remaining barriers to radically reworking a video as easily as you can rework a still photo.

Project Primrose earned an enthusiastic audience reaction to the idea of wearable technology. Adobe research scientist Christine Dierk led the demo while wearing a dress she made, and then the dress began to change its color and pattern as the audience watched. The dress uses a special material that can display patterns and animations and is interactive, responding to how she moved.

For typography, there’s Project Glyph Ease. Let’s say you have a layer of display type in Illustrator, but no font on your computer offers the look you want. You draw three type characters as a style reference, even on paper, and the AI generates the remaining characters based on that style. And that’s not all: The type in the AI-generated font is live text, so you can edit it.

Project Poseable lets you skip many 3D figure-posing steps, where you would usually have to position individual limbs and body parts. It saves time by recognizing scene context, physiology, and physics to more quickly pose a figure the way you intended, and also by letting you show it a reference image with a pose you want your 3D figure to match.

Project ResUp is an AI-based upscaler for video, so you can improve video whose resolution is too low because, for example, it’s cropped significantly or uses an old standard.

Project DubDubDub translates speech audio in video to another language. Maybe you’ve heard that one before, but the difference here is that the result is intended to sound convincingly like the original speaker. So, if it translates a video of you so that you are speaking French, and you don’t know a word of French, the resulting video appears to be you speaking fluent French in what sounds like your own voice.

Project Stardust offers a more advanced and automated form of object removal from images.

Project See Through was one of the more remarkable demos. It removes reflections, such as on a glass window, and appears to clearly reveal what’s behind the reflections. I can already see this being used to remove reflections from eyeglasses in portraits.

I don’t typically cover Sneaks in this annual article, but this time, I felt that the Sneaks provided a strong indication of how Adobe is exploring generative AI. They want to get it away from pure text input, and it’s clear that Adobe intends to develop specialized AI tools for specific needs in vector graphics, video, 3D, and typography. I believe it’s an indication that what we have seen so far with generative AI might only be the tip of the iceberg.

Creative Cloud: The Firefly Generation

Adobe MAX made it clear that generative AI can be much more than what’s currently available in Photoshop, Illustrator, and the Firefly web app. However, you might be aware of issues with generative AI, some of which have been discussed in the news. Why doesn’t generative AI always look right? How can we know if images used generative AI? How can I prove I didn’t use generative AI? Doesn’t generative AI assimilate the work of artists without their consent, credit, or compensation? Adobe is working out several ways to address these issues, and the way they’re doing it makes generative imaging much more likely to be acceptable for use in commercial production.

Beyond the text prompt: Hints and guides

The Sneaks showed that Adobe wants to have generative AI produce the content you want more quickly by freeing you from having to craft just the right text description, which makes sense given that Creative Cloud tools are visually oriented. Look for more ways to guide and refine generative AI, which could include sketches (drawn on screen or scanned on paper), reference art or video, a live camera (for example, to indicate a pose, scene, or color palette), or a microphone (for example, to translate video dialog using your voice).

Show your work: Content Credentials

There’s no reliable way to detect that a random image is authentic. It is possible, however, to include tamper-evident metadata that declares the state of an image, indicating if the image no longer matches that state. That is roughly the goal of the Content Authenticity Initiative, which is backed by news and arts organizations as well as software companies. Adobe supports this initiative with its Content Credentials technology.
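
As a rough illustration of what “tamper-evident” means, here is a minimal Python sketch. It is not the Content Credentials format or Adobe's code: the key, the function names, and the manifest layout are hypothetical, and a shared-secret HMAC stands in for whatever signing scheme a real system uses.

```python
import hashlib
import hmac
import json

# Hypothetical signing key, for illustration only.
SECRET_KEY = b"demo-signing-key"

def make_manifest(image_bytes, claims):
    """Bind a set of claims to the exact bytes of an image."""
    payload = {
        "claims": claims,
        "content_sha256": hashlib.sha256(image_bytes).hexdigest(),
    }
    body = json.dumps(payload, sort_keys=True).encode("utf-8")
    signature = hmac.new(SECRET_KEY, body, hashlib.sha256).hexdigest()
    return {"payload": payload, "signature": signature}

def verify(image_bytes, manifest):
    """Report whether the manifest and the image still match each other."""
    body = json.dumps(manifest["payload"], sort_keys=True).encode("utf-8")
    expected = hmac.new(SECRET_KEY, body, hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected, manifest["signature"]):
        return "manifest altered"
    actual_hash = hashlib.sha256(image_bytes).hexdigest()
    if actual_hash != manifest["payload"]["content_sha256"]:
        return "image no longer matches manifest"
    return "ok"

original = b"pretend these are image bytes"
manifest = make_manifest(original, {"tool": "example editor", "generative_ai": True})

print(verify(original, manifest))               # unchanged image
print(verify(original + b"edited", manifest))   # image changed after signing
```

Note what the sketch does and does not do: it cannot tell you whether an arbitrary image is authentic, but it can tell you that a particular image has changed since its metadata was attached, or that the metadata itself was edited.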

Content Credentials support is starting to appear in Adobe apps, although a full end-to-end workflow is not yet complete. If Content Credentials are present in a file, they can be inspected by uploading the file to the Verify service (Figure 10). Adobe has a goal of including Content Credentials in images where Adobe Firefly technology was applied in any form, so that it will be easier to know if you’re looking at unaltered content. But they are just starting to implement this. Currently, Content Credentials are available in a few apps such as Photoshop, but the feature is still in beta in other apps such as Lightroom.

Figure 10. Reviewing the Content Credentials of an image exported from Photoshop and then uploaded to the Verify service

For more information, see this post.

Commercially safe: Ethically sourced content

Generative AI works well only when it’s trained to recognize successful solutions using a large set of sample content. Many are concerned about whether a service’s generative AI was trained on images where proper usage rights have been secured, instead of simply “scraping” the web to pick up any images it finds. Adobe claims that Adobe Firefly uses “ethically sourced” content: imagery from Adobe Stock (meaning Adobe has secured appropriate rights) or that is legally in the public domain (which is not the same thing as an image being publicly viewable).

To demonstrate confidence that Adobe Firefly can be used without fear of subjecting an employer or client to intellectual property (IP) lawsuits, Adobe offers to pay the legal defense fees for enterprise customers if their contract with Adobe includes a Firefly indemnification option. Naturally it’s subject to conditions; for more information, you can read the Firefly Legal FAQs for Enterprise Customers (PDF).

Credit where credit’s due: Firefly Contributor Bonus

Another frequently discussed issue is whether creators are ever compensated for their work being used as training data for generative AI models. Adobe has introduced the Firefly Contributor Bonus, which issues payments to Adobe Stock contributors whose content was used to train the Firefly model. For more information, see the Firefly FAQ for Adobe Stock Contributors.

The invisible hand on the throttle: Generative Credits

Generative AI may involve models that can be too large for users to download, and more processing power than many users have, so the more advanced generative AI features may run on powerful cloud servers. There is a cost to running these servers, so to keep generative AI practical, many services require some form of payment.

Creative Cloud users already pay a subscription fee that can be substantial (and rising), so thankfully, Adobe does not charge extra for using generative AI. However, to discourage excessive use, starting on November 1, 2023, Adobe will begin using Generative Credits, which give you access to priority processing. They work a little like a mobile data plan with a limit.

Every month, you may receive a certain number of Generative Credits. The exact number depends on your Creative Cloud plan. When you use a feature that involves generative AI, you use a Generative Credit. If you run out, you can still use generative AI, but it may take longer to process your request. If you need to guarantee priority processing before the month resets, you have the option to buy more credits (and keep your project on schedule).

For now, the number of features that use a Generative Credit is small. For Photoshop, it’s currently just Generative Fill and Generative Expand—not all AI features. Adobe is expected to adjust the details of how many Generative Credits you get depending on how usage patterns develop. For current information, see the Generative Credits FAQ, which also tells you which activities consume Generative Credits in Creative Cloud apps such as Illustrator and Express.
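
The credit behavior described above can be modeled in a few lines of Python. This is only a sketch of the rules as stated in this article: the class name, the one-credit-per-use cost, the allotment numbers, and the assumption that unused credits do not roll over are all hypothetical, so check the Generative Credits FAQ for the actual terms.

```python
class GenerativeCreditAccount:
    """Toy model of a monthly Generative Credits allowance (illustrative only)."""

    def __init__(self, monthly_allotment):
        self.monthly_allotment = monthly_allotment
        self.remaining = monthly_allotment

    def generate(self):
        """Use a generative feature once and return the processing tier."""
        if self.remaining > 0:
            self.remaining -= 1
            return "priority"
        # Out of credits: the feature still works, but may be slower.
        return "standard"

    def buy_credits(self, count):
        """Top up mid-month to regain priority processing."""
        self.remaining += count

    def start_new_month(self):
        """Reset to the plan's allotment (assumes no rollover)."""
        self.remaining = self.monthly_allotment


account = GenerativeCreditAccount(monthly_allotment=2)
print([account.generate() for _ in range(3)])  # third request falls back to standard
account.buy_credits(1)
print(account.generate())
```

The key point the model captures is that running out of credits slows you down rather than locking you out, which is why the mobile data plan comparison fits.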

Processing the future with lessons from the past

As generative AI produces more convincing results at higher resolutions, many will be increasingly concerned that we can no longer believe the images we see. But some would say images and video were never fully trustworthy in the first place, because it was always possible to alter how you perceive a scene through in-camera choices such as composition, perspective, and timing. Even so, generative AI takes it to an entirely new level.

In a way, I can see generative AI imaging restoring the mindset of the time before photography, when all human-made images were not exact representations, and people understood that. Now, as then, we’ll want to ask questions: Who created this image? Is it important that it be an exact representation, and if so, how do I find out if it is? What, and who, did they choose to add to and exclude from the image?

Generations to Come

The future of Creative Cloud is increasingly unfamiliar, yet also promising, for anyone originally trained to do creative graphics work in isolated desktop apps focused on print and maybe the web too. MAX 2023 shows Adobe’s vision for a future where your tools are available on any platform (desktop, mobile, web), can deliver to any medium (web/social media, video, print), and can help you collaborate with anyone, anywhere (through Share for Review and Invite to Edit). On top of all that, MAX 2023 showed some magical new tech that may help you accomplish practical tasks with the help of generative AI.

If Creative Cloud is like a train of creative apps, adding generative AI is like attaching a bullet-train locomotive to it, which is now pulling Creative Cloud from “what you see is what you get” to “what you see is what you wish” faster than anyone could have guessed a year ago. We may want to wait out some bumps and glitches, but as generative AI matures, it has the potential to become so entrenched that future designers will likely use generative AI features as everyday production tools that to them will feel as unremarkable as today’s selection marquee.
