Faster and Easier: How Face Recognition Tools Are Evolving

People masking in Camera Raw and Lightroom, improved portrait modes, and advanced retouching tools are just some of the ways face-recognition tools are improving photo workflows.

In “How Machine Learning Is Winning the Photographic Face Race,” I pointed out how technology can not only recognize faces and facial features but also edit those features individually. Nearly five years on, tools that take advantage of facial recognition are now embedded in our everyday workflows, and it has never been faster or easier to apply natural-looking edits. Here are a few examples.

People Masking in Adobe Camera Raw and Lightroom

When Adobe revamped the masking features in Adobe Camera Raw, Lightroom Classic, and Lightroom desktop, it went well beyond using machine learning to detect a subject or a sky. Now, when you open the Masking panel, the software scans your image for people and lists each one individually.

You can then choose specific areas, such as face and body skin, hair, or even clothes, and create masks to edit just those areas. Once the masks are made, use the suite of editing controls to adjust tone, color, or texture. For instance, an easy way to smooth skin naturally is to make a mask for the skin and reduce the Texture value. Or use the Point Color tool to even out skin areas that are more flushed than others.

These masks are especially helpful when you’re editing a series of photos from the same session. You can make face-specific adjustments to one image, then copy them to others or create an adaptive preset to apply to the entire set. Even if the subjects change position within the frame from shot to shot, the software identifies the facial features in each one and applies the edits consistently. That alone can save you hours of work on large projects.

What’s key is that these features are now part of the apps’ standard toolset, so they don’t require a plug-in or a separate purchase.

Portrait Modes

The Portrait mode on your smartphone has also come a long way over the last few years. Because phone cameras use small sensors and fixed apertures, it was difficult to get the creamy, blurry bokeh background common in professional portraits. To compensate, phone makers used face and subject recognition to identify the likely subject of a photo and then blurred the background artificially. Unfortunately, the results looked just that: artificial.

Now, if you own a recent phone model, its camera does a much better job of simulating a naturally soft background. More important, you can adjust the amount of blur later, either on the phone or in software, because the image includes a depth map that estimates how far each part of the scene is from the camera. The edge between subject and background is also usually less apparent than it used to be. (Tip: reducing the amount of blur almost always makes the effect look more realistic.)

You can get a similar effect even with photos captured by traditional cameras, which don’t create depth maps. The new Lens Blur tool in Adobe Camera Raw and the Lightroom apps scans the image and builds its own depth information, which you can then edit. Other apps, such as Luminar Neo, can also soften the background.

Is it the same as capturing an image with a nice f/1.4 lens? Usually not. But when you need to add some separation between subject and background, or de-emphasize a background element in the frame, the feature can work well.

Advanced Retouching Tools

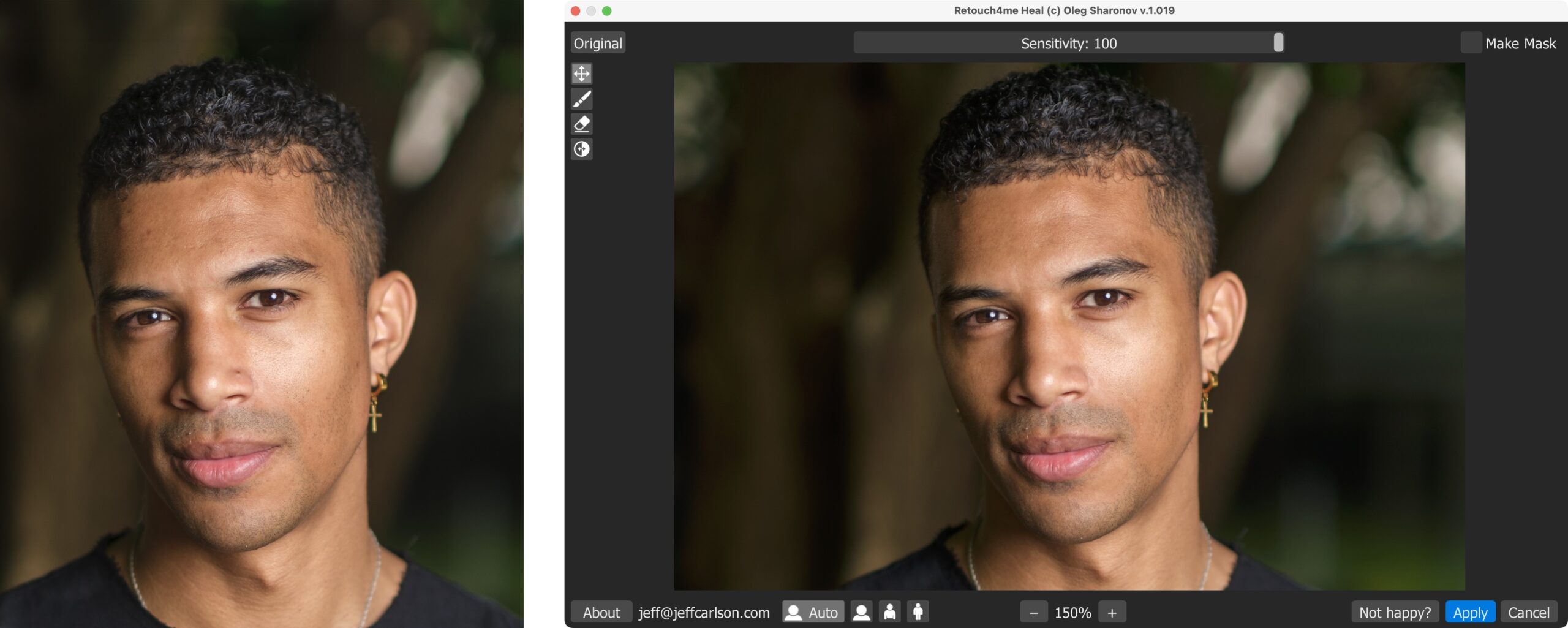

Of course, there’s still a place for advanced retouching, and machine learning is making that process faster and easier. Retouch4Me sells several Photoshop plug-ins that tackle specific retouching tasks. For example, Retouch4Me Heal scans a face and removes spots and blemishes in one pass, so you don’t need to click on each one.

And it’s not just facial features. Retouch4Me Fabric can smooth out clothing wrinkles that you missed, or forgot to iron out, before the photo shoot.

As another example, Evoto is an AI-based app that performs portrait edits ranging from blemish removal and digital makeup to sophisticated facial and body sculpting.

The Assistant You Didn’t Think You Needed

In all of these examples, machine learning is accomplishing tasks that used to take much more time and manual effort, because it can identify what’s in an image, such as facial features and clothing. At the same time, these tools don’t invent pixels the way many generative AI tools do. You’re still in charge, but with a virtual assistant to handle the editing grunt work quickly.

This article was last modified on February 23, 2024

This article was first published on February 23, 2024
