Adobe Firefly + Generative Fill

Adobe takes the next step in building Generative AI features into its offerings.

This article appears in Issue 21 of CreativePro Magazine.

It’s a safe bet that 2023 will be remembered as the year Generative AI hit the mainstream. One major reason for that is Firefly, Adobe’s new automated image-generation technology. To ensure Firefly gets maximum exposure right out of the gate, Adobe has made it publicly available at firefly.adobe.com and baked the technology into a beta version of Photoshop with the Generative Fill feature. In this article, we’ll explore what you can do with both.

Firefly on the Web

Let’s begin with a look at what you can do (and will be able to do in the future) with the web version of Firefly. There are few tools to learn. Indeed, for many operations you don’t need any tools at all, as text prompts are enough to get you started creating fantasy images.

Text to Image

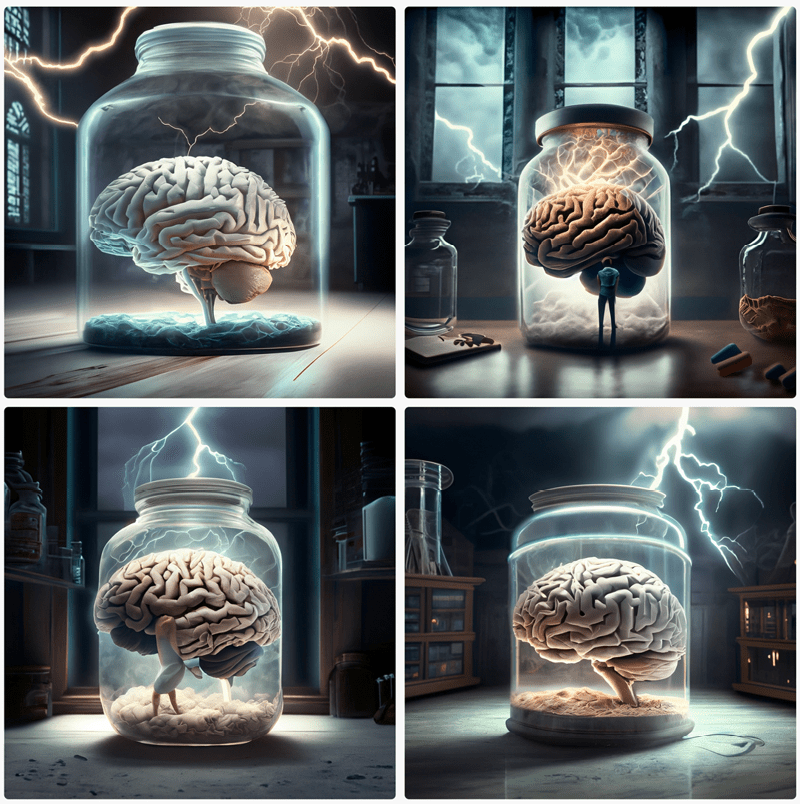

As the name implies, with Text to Image all you have to do is tell Firefly what you want to create, and it will do all the work for you. But before you start typing, pause for a moment and think about how to describe your desired image precisely and in detail. The more detailed your text prompt, the more likely Firefly is to produce something like what you had in mind. The prompt for Figure 1 was “brain in a jar in a dark castle laboratory with lightning outside the window.” Firefly produces four results for you to choose from, though you can always ask for more if none of the first batch hits the mark.

Figure 1. A brain in a jar, courtesy of Adobe Firefly

There was clearly some issue with the idea of the lightning being outside the window rather than in the room. One of the images has a person standing in the jar; another has what appears to be a mutant goose. But the overall impression in all four cases is striking. Firefly got the mood right, but not all of the details. If you make the prompt more detailed, you’re likely to get even better results. Figure 2 shows the result of asking for “highly detailed retro 3D printer on wooden desk in dusty study at twilight.”

Figure 2. Imaginative renderings of a retro 3D printer

Figure 3. A dog wearing a crown, sitting on a cloud

Figure 4. Layered paper animals in a forest

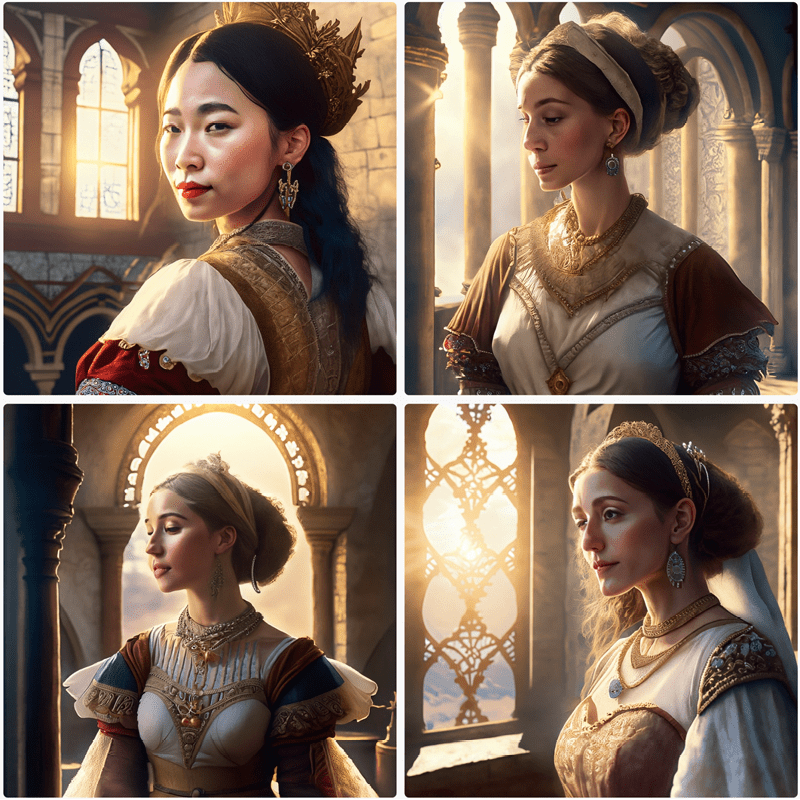

Figure 5. Firefly can produce painterly images of people, but not photographic ones.

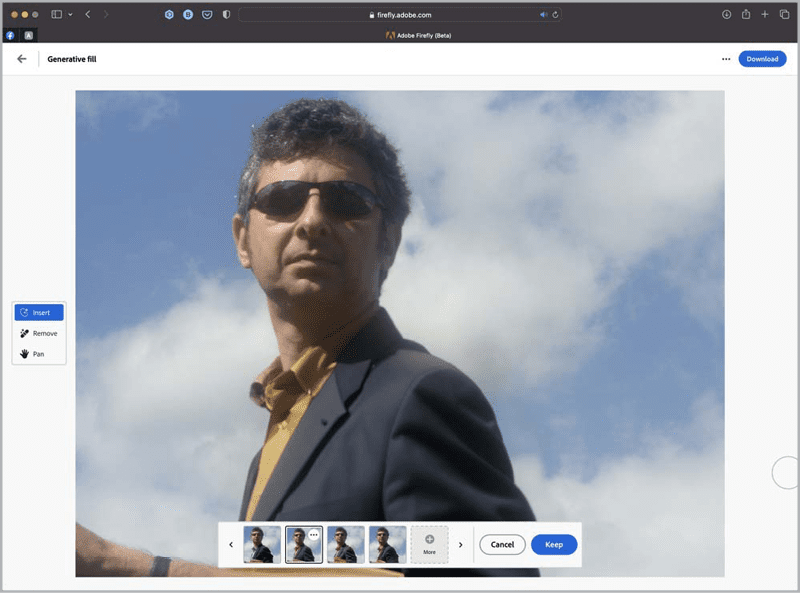

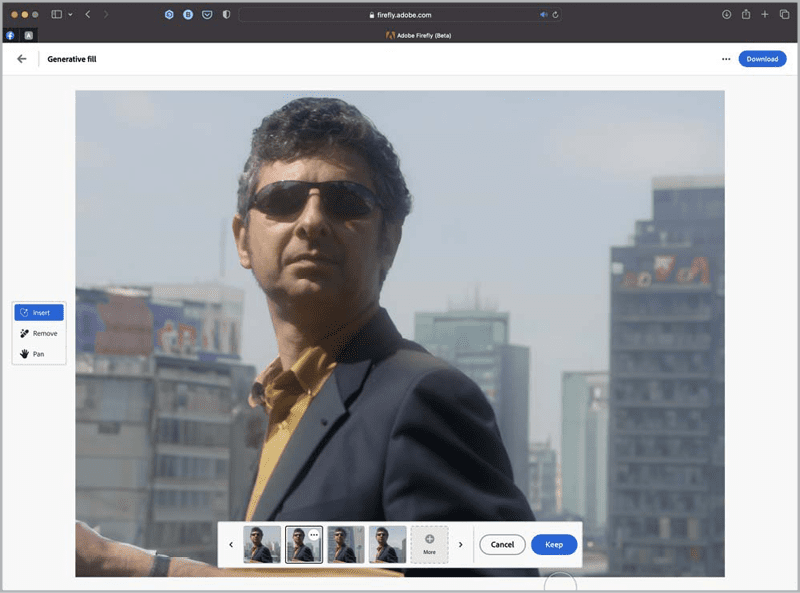

Generative Fill

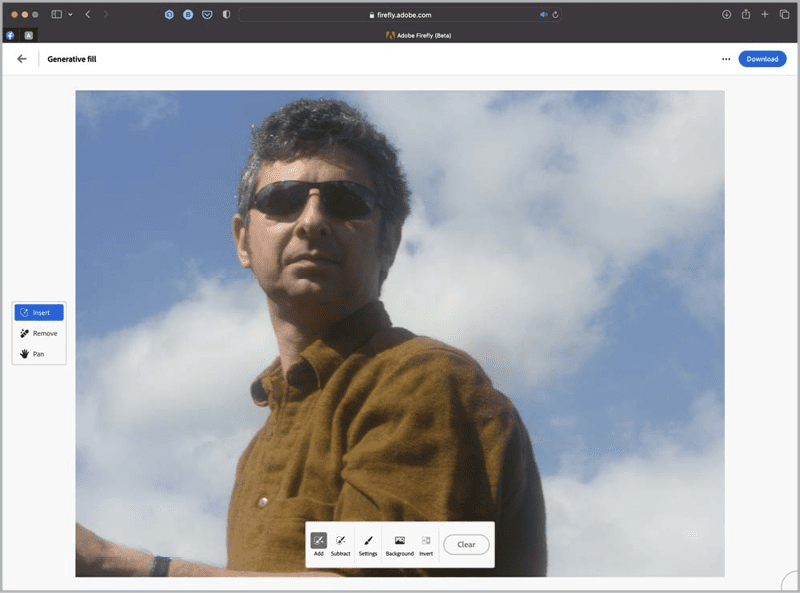

As well as creating entire images from scratch, Firefly can modify existing images with text prompts. In this first example, I started with this image of myself on a sunny day (Figure 6).

Figure 6. Steve Caplin on a sunny day

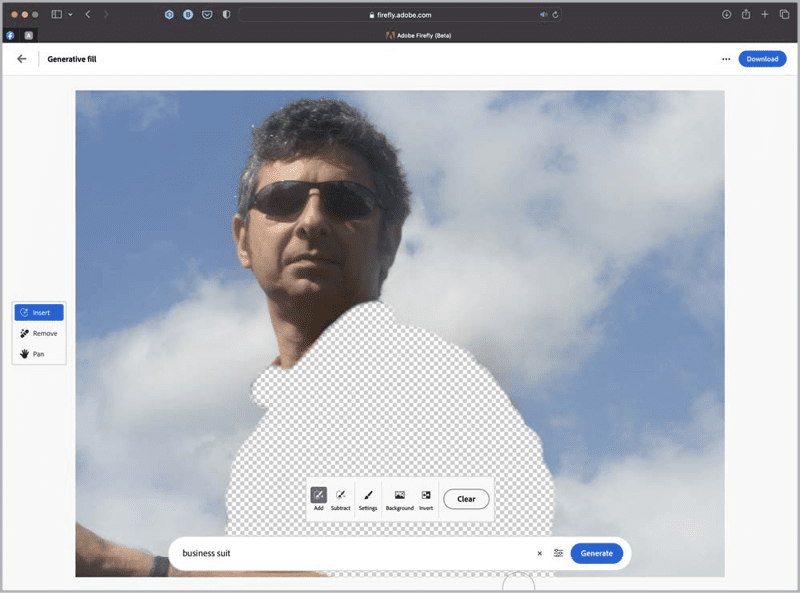

Figure 7. The shirt area selected, shown as a checkerboard pattern

Figure 8. A convincing business suit, with appropriate shadows

Figure 9. The clouds replaced with a cityscape

Text Effects

Another tool in Firefly’s toolbox is Text Effects, which creates text based on the prompt you supply. You can choose the font from a menu of 16 (including Acumin Pro as well as four other sans-serif fonts, three serif fonts, and a few display fonts). To have Firefly generate text effects, simply enter the word(s) you want to use and describe the desired style. The results are impressive: Figure 10 and Figure 11 show spring flower and foil balloon effects, which are rendered as transparent PNG files so you can place them over the background of your choice.

Figure 10. Spring, rendered as spring flowers

Figure 11. Foil balloon lettering

Generative Recolor

Perhaps the least impressive tool, at least for now, is Generative Recolor. As the name suggests, this recolors vector artwork based on your text prompts. The artwork must be saved as an SVG file first, which means an extra step in Illustrator before you can proceed. The results are often odd and not very useful, with colors shifted almost randomly. It’s hard to see how my drawing of a pirate (Figure 12) has been significantly improved in the process (Figure 13).

Figure 12. My drawing of a pirate…

Figure 13. …recolored. But so what?

Firefly Further

A number of additional Firefly technologies are forthcoming. The details are unknown at this point, because the website describes each one with only an image and a brief text description. They include the following:

- Extend Image, which is available now in the beta version of Photoshop, as we’ll see later on
- Text to Brush, which generates Photoshop brushes
- Text to Pattern, which creates seamless tiled patterns
- 3D to Image, which aims to create textured objects from uploaded 3D models, and which could be a real game changer for 3D artists
- Text to Vector, which creates editable vector artwork from a text prompt
- Sketch to Image, which allows users to upload line art drawings and have them turned into full-color painterly images

All these putative tools are labeled as “in exploration,” with no anticipated arrival date. But if they perform as well as the sample images suggest, it’s definitely worth keeping an eye on the Firefly website for their arrival.

Photoshop + Firefly

With the meteoric rise of AI-generated images, you might be concerned that traditional Photoshop artists are being left behind. But that’s all set to change with the release of the first public beta of Photoshop to include Adobe’s Firefly engine, which generates imagery to order via the Generative Fill feature. As with all beta software, Firefly in Photoshop is not yet ready for prime time. Indeed, Adobe’s GenAI User Guidelines specifically prohibit its use for commercial work while it’s in beta. In the meantime, here’s what it can do for you.

Outfilling

We’re all used to Content-Aware Fill, which does a reasonable job of extending and reshaping images. But now Photoshop’s new Generative Fill feature (powered by the same GenAI as Firefly) takes that to a whole new level. In Figure 14, a portrait-format shot of a street scene has been extended to the left by widening the canvas with the Crop tool.

Figure 14. The original street, with the canvas extended to the left

Figure 15. Two views of the extended street

Figure 16. The extended street, with generated trees

Adding a Text Prompt

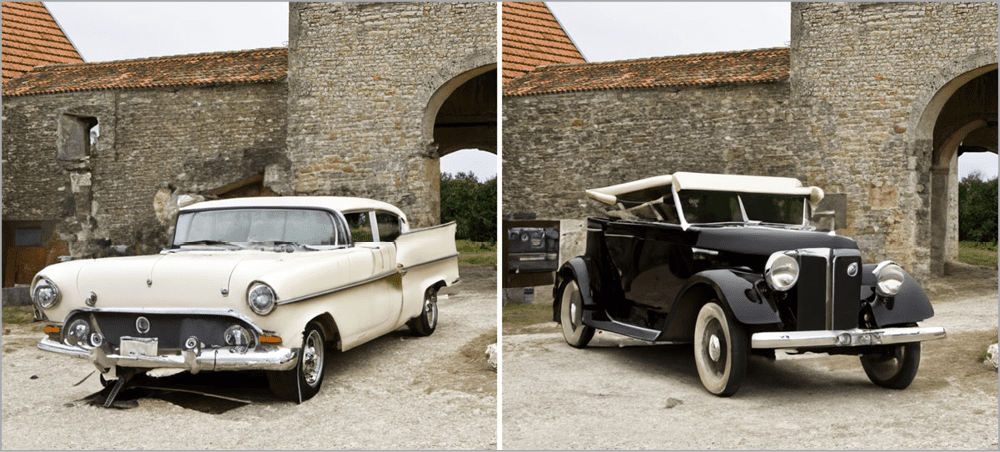

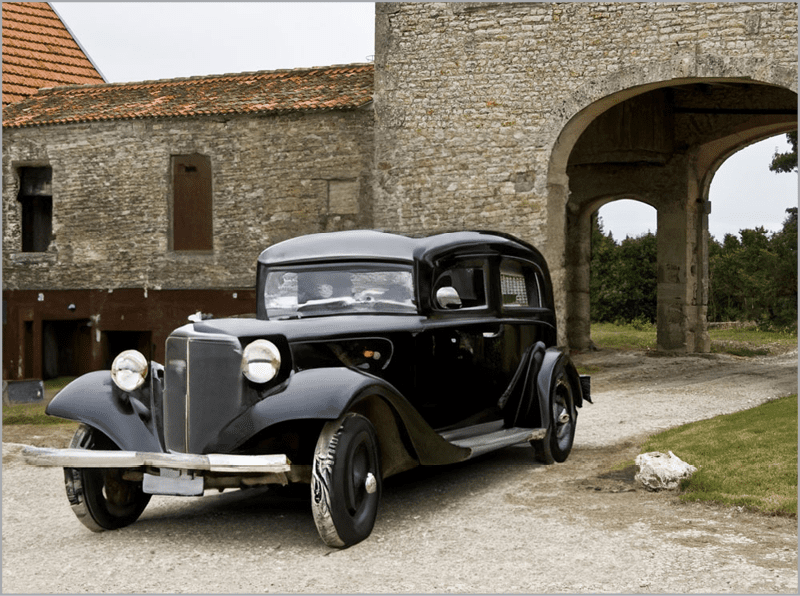

You can choose to fill a selected area with literally anything you can imagine. In the example in Figure 17, I started with a view of an ancient farmyard.

Figure 17. A farmyard with an empty space in front of it

Figure 18. Two failed cars

Figure 19. The car, in perfect perspective for the scene

Figure 20. A seated figure really does sit on the running board.

Figure 21. The added hay wagon

Figure 22. The hay wagon layer masked to reveal the woman’s face again.

Figure 23. The puddle produces largely accurate reflections.

Object Removal

Several versions ago, Photoshop introduced Content-Aware Fill, which lets you replace objects in a scene with texture sampled from elsewhere in the image. Generative Fill goes much further. In this example (Figure 24), I wanted to remove the line of cars in front of the building.

Figure 24. The line of cars spoils our view of this building.

Figure 25. The cars, gone in an instant.

Horsing Around (with Mediocre Results)

You can try to produce photographic images of animals, although the results are often questionable at best. The image of an abandoned mine entrance in Figure 26 makes the perfect backdrop to demonstrate. I started by making a rectangular selection where I wanted the animal to appear.

Figure 26. The original mine entrance

Figure 27. An acceptable horse and cart

Figure 28. Oops! There are somewhat bizarre results in this horse and cart version.

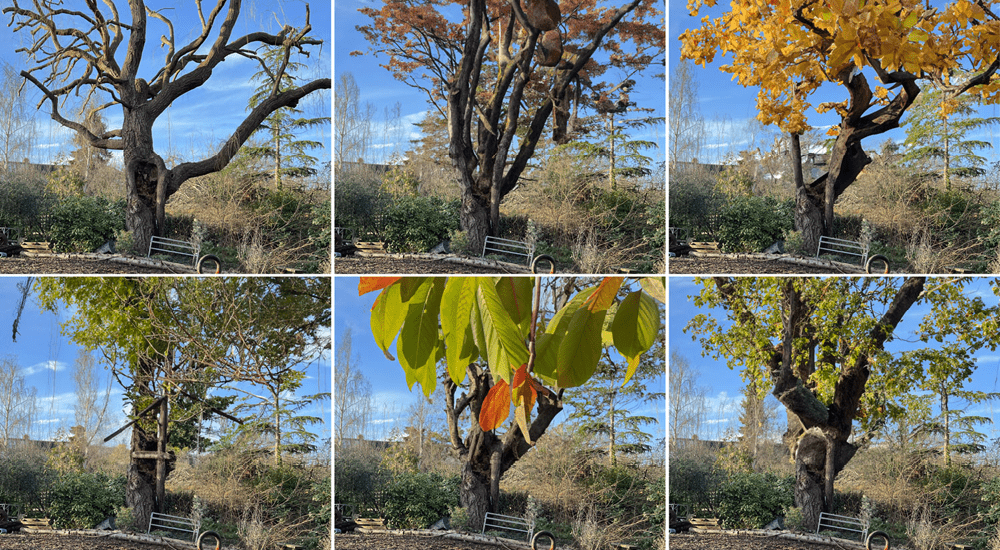

Getting It Wrong

Although Generative Fill is undeniably powerful, there are times when it simply refuses to produce the results you want. In Figure 29, I asked Photoshop to add leaves to the tree (top left). None of the results are ideal, and some of them are downright weird.

Figure 29. Adding leaves to a tree seems beyond Photoshop’s present capabilities.

Figure 30. A prompt for a man in a deckchair produces some scary results.

Figure 31. The empty street scene

Figure 32. Love the speeding car, but who asked for a bush behind it?

Why Can’t Photoshop Create Convincing People?

There are two sorts of artificial intelligence. Standard (or “narrow”) AI is what allows your car to know when another car is coming towards it and to dim the headlights. It does that job well, but you can’t ask it to render the plot of Moby-Dick as a haiku. The second sort is the general-purpose generative AI behind tools such as ChatGPT, often seen as a step toward Artificial General Intelligence (AGI), which aims to allow computers to do anything a human can do. Text tools of this kind use what’s called a Large Language Model (LLM), which works on the basis of the most likely words to appear in any given context. So, if you say “I’m going to take the kids to…” then the word that follows is more likely to be “Disneyland” than, say, “cauliflower” or “Iceland.” This is a trivial example, but by now LLMs have probably read a huge proportion of everything ever written. This enables ChatGPT and the like to produce text on just about any subject. The results may or may not bear any resemblance to reality, but they almost always sound convincing.

Image creation tools work on a similar principle: They learn from images and their captions rather than from text alone, but the underlying idea of learning what is most likely to belong in a given context is much the same. And tools such as Midjourney have consumed many millions of images from around the internet, which enables them to create thoroughly realistic synthetic images to illustrate just about anything you ask for.

So, more is better, right? Well, up to a point. The reason image generators are so good is that they’re indiscriminate about where they source their training material. That became evident recently, when Getty Images sued Stability AI, the company behind Stable Diffusion, for allegedly poaching 12 million of its copyrighted photographs in order to train the model. How did Getty know? Because many of the images produced by Stable Diffusion included the Getty Images watermark, as Figure 33 shows.

Figure 33. Evidence from the Getty Images lawsuit against Stability AI, maker of Stable Diffusion: The original Getty image (left) is reworked by Stable Diffusion (right), but the watermark is also retained.
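To make the “most likely next word” idea from the kids-to-Disneyland example concrete, here is a toy sketch in Python. It is emphatically not how ChatGPT, Firefly, or any real model works (those use neural networks trained on vast amounts of data); it simply counts, in a tiny made-up set of sentences, which word follows the phrase “the kids to” and picks the most frequent one. The sample sentences and the function name `next_word_counts` are hypothetical, invented purely for illustration.

```python
from collections import Counter

# A tiny, made-up "corpus" standing in for the enormous amount
# of text a real language model learns from (hypothetical data).
corpus = [
    "take the kids to disneyland",
    "take the kids to disneyland",
    "take the kids to school",
    "take the kids to iceland",
]


def next_word_counts(sentences, context):
    """Count which word immediately follows `context` in each sentence."""
    context_words = context.split()
    n = len(context_words)
    counts = Counter()
    for sentence in sentences:
        words = sentence.split()
        # Slide a window over the sentence, stopping early enough
        # that a following word always exists.
        for i in range(len(words) - n):
            if words[i:i + n] == context_words:
                counts[words[i + n]] += 1
    return counts


counts = next_word_counts(corpus, "the kids to")
best, freq = counts.most_common(1)[0]
# Print the winning word and its share of all observed continuations.
print(best, freq / sum(counts.values()))
```

Run as-is, it prints `disneyland 0.5`, because “disneyland” follows the phrase in two of the four sample sentences, while “cauliflower” never appears at all. Real models weigh far longer stretches of context than a fixed three-word phrase, which is what lets them produce convincing text on almost any subject.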

Firefly Takes Flight

Unlike other AI image-generation tools, Generative Fill works directly in Photoshop, which means we can integrate generated results with our own images, choose exactly where the new elements appear, and then use standard Photoshop tools to modify them. Meanwhile, the Firefly website allows you to create entirely new images from mere text prompts. Together, they’re like having an infinite, free photo library at your fingertips. Where this leaves traditional Photoshop artists, illustrators, and photographers, though, is another question. Are the skills we’ve painstakingly learned over our careers now becoming obsolete? Or are these just new tools that we can learn to master and turn to our advantage? One thing is certain: The gap between inspiration and execution is getting shorter by the day. Stay tuned!
